Sqoop Installation, Configuration, and Demo

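Sqoop is a tool for transferring data between Hadoop (Hive, HBase) and relational databases. It can import data from a relational database (for example MySQL, Oracle, or Postgres) into HDFS, and it can export data from HDFS back into a relational database. Sqoop is now a top-level Apache project; the current releases are 1.4.4 and Sqoop2 1.99.3. This article uses version 1.4.4 to walk through basic installation and configuration and to demonstrate a few simple use cases.

- Installation and configuration

- Preparing test data

- Importing data into HDFS

- Importing data into Hive

- Importing data into HBase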

[1] Installation and configuration

sqoop-1.4.4.bin__hadoop-2.0.4-alpha.tar.gz

1.1. Download, then extract and configure:

tar -zxvf sqoop-1.4.4.bin__hadoop-2.0.4-alpha.tar.gz -C /usr/local/
cd /usr/local
ln -s sqoop-1.4.4.bin__hadoop-2.0.4-alpha sqoop

vi ~/.bash_profile :

#Sqoop add by micmiu.com
export SQOOP_HOME=/usr/local/sqoop
export PATH=$SQOOP_HOME/bin:$PATH
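After editing the profile it can be worth confirming that the new entries took effect. The following is a minimal sketch, assuming the install location /usr/local/sqoop used above; the `on_path` helper is a hypothetical name introduced only for this illustration.

```shell
# Assumed install location, taken from the profile entries above
export SQOOP_HOME=/usr/local/sqoop
export PATH=$SQOOP_HOME/bin:$PATH

# Hypothetical helper: succeed when the given directory is listed in PATH
on_path() {
  case ":$PATH:" in
    *":$1:"*) return 0 ;;
    *) return 1 ;;
  esac
}

if on_path "$SQOOP_HOME/bin"; then
  echo "OK: $SQOOP_HOME/bin is on PATH"
else
  echo "MISSING: $SQOOP_HOME/bin is not on PATH"
fi

# A PATH entry alone does not prove the launcher exists, so check that too
if command -v sqoop >/dev/null 2>&1; then
  echo "sqoop launcher found at: $(command -v sqoop)"
else
  echo "sqoop launcher not found; check the symlink under /usr/local"
fi
```

In a fresh login shell the two `export` lines are unnecessary, because ~/.bash_profile has already been sourced.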

vi $SQOOP_HOME/conf/sqoop-env.sh

# Specify the actual value of each environment variable
# Set Hadoop-specific environment variables here.
#Set path to where bin/hadoop is available
#export HADOOP_COMMON_HOME=
#Set path to where hadoop-*-core.jar is available
#export HADOOP_MAPRED_HOME=
#set the path to where bin/hbase is available
#export HBASE_HOME=
#Set the path to where bin/hive is available
#export HIVE_HOME=

# Hadoop

export HADOOP_PREFIX="/usr/local/hadoop"

export HADOOP_HOME=${HADOOP_PREFIX}

export PATH=$PATH:$HADOOP_PREFIX/bin:$HADOOP_PREFIX/sbin

export HADOOP_COMMON_HOME=${HADOOP_PREFIX}

export HADOOP_HDFS_HOME=${HADOOP_PREFIX}

export HADOOP_MAPRED_HOME=${HADOOP_PREFIX}

export HADOOP_YARN_HOME=${HADOOP_PREFIX}

# Native Path

export HADOOP_COMMON_LIB_NATIVE_DIR=${HADOOP_PREFIX}/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_PREFIX/lib/native"

# Hadoop end

#Hive

export HIVE_HOME=/usr/local/hive

export PATH=$HIVE_HOME/bin:$PATH

#HBase

export HBASE_HOME=/usr/local/hbase

export PATH=$HBASE

#add by micmiu.com

Copy the MySQL JDBC driver jar into the $SQOOP_HOME/lib directory.

[2] Preparing test data

Using MySQL as an example:

- 192.168.6.77 (hostname: Master.Hadoop)

- database: test

- user: root, password: micmiu

CREATE TABLE `demo_blog` (
  `id` int(11) NOT NULL AUTO_INCREMENT,
  `blog` varchar(100) NOT NULL,
  PRIMARY KEY (`id`)
) ENGINE=MyISAM DEFAULT CHARSET=utf8;

CREATE TABLE `demo_log` (
  `operator` varchar(16) NOT NULL,
  `log` varchar(100) NOT NULL
) ENGINE=MyISAM DEFAULT CHARSET=utf8;

insert into demo_blog (id, blog) values (1, "micmiu.com");

insert into demo_blog (id, blog) values (2, "ctosun.com");

insert into demo_blog (id, blog) values (3, "baby.micmiu.com");

insert into demo_log (operator, log) values ("micmiu", "create");

insert into demo_log (operator, log) values ("micmiu", "update");

insert into demo_log (operator, log) values ("michael", "edit");

insert into demo_log (operator, log) values ("michael", "delete");

[3] Importing data into HDFS

sqoop import --connect jdbc:mysql://192.168.6.77/test --username root --password micmiu --table demo_blog

$ sqoop import --connect jdbc:mysql://192.168.6.77/test --username root --password micmiu --table demo_blog
Warning: /usr/lib/hcatalog does not exist! HCatalog jobs will fail.
Please set $HCAT_HOME to the root of your HCatalog installation.
14/04/09 09:58:43 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.
14/04/09 09:58:43 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.
14/04/09 09:58:43 INFO tool.CodeGenTool: Beginning code generation
14/04/09 09:58:43 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `demo_blog` AS t LIMIT 1
14/04/09 09:58:43 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `demo_blog` AS t LIMIT 1
14/04/09 09:58:43 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /usr/local/hadoop
Note: /tmp/sqoop-hadoop/compile/e8fd26a5bca5b7f51cdb03bf847ce389/demo_blog.java uses or overrides a deprecated API.
Note: Recompile with -Xlint:deprecation for details.
14/04/09 09:58:44 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hadoop/compile/e8fd26a5bca5b7f51cdb03bf847ce389/demo_blog.jar
14/04/09 09:58:44 WARN manager.MySQLManager: It looks like you are importing from mysql.
14/04/09 09:58:44 WARN manager.MySQLManager: This transfer can be faster! Use the --direct
14/04/09 09:58:44 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.
14/04/09 09:58:44 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)
14/04/09 09:58:44 INFO mapreduce.ImportJobBase: Beginning import of demo_blog
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/usr/local/hbase-0.98.0-hadoop2/lib/slf4j-log4j12-1.6.4.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]
14/04/09 09:58:44 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar
14/04/09 09:58:45 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps
14/04/09 09:58:45 INFO client.RMProxy: Connecting to ResourceManager at Master.Hadoop/192.168.6.77:8032
14/04/09 09:58:47 INFO db.DataDrivenDBInputFormat: BoundingValsQuery: SELECT MIN(`id`), MAX(`id`) FROM `demo_blog`
14/04/09 09:58:47 INFO mapreduce.JobSubmitter: number of splits:3
14/04/09 09:58:47 INFO Configuration.deprecation: mapred.job.classpath.files is deprecated. Instead, use mapreduce.job.classpath.files
14/04/09 09:58:47 INFO Configuration.deprecation: user.name is deprecated. Instead, use mapreduce.job.user.name
14/04/09 09:58:47 INFO Configuration.deprecation: mapred.cache.files.filesizes is deprecated. Instead, use mapreduce.job.cache.files.filesizes
14/04/09 09:58:47 INFO Configuration.deprecation: mapred.cache.files is deprecated. Instead, use mapreduce.job.cache.files
14/04/09 09:58:47 INFO Configuration.deprecation: mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces
14/04/09 09:58:47 INFO Configuration.deprecation: mapred.output.value.class is deprecated. Instead, use mapreduce.job.output.value.class
14/04/09 09:58:47 INFO Configuration.deprecation: mapreduce.map.class is deprecated. Instead, use mapreduce.job.map.class
14/04/09 09:58:47 INFO Configuration.deprecation: mapred.job.name is deprecated. Instead, use mapreduce.job.name
14/04/09 09:58:47 INFO Configuration.deprecation: mapreduce.inputformat.class is deprecated. Instead, use mapreduce.job.inputformat.class
14/04/09 09:58:47 INFO Configuration.deprecation: mapred.output.dir is deprecated. Instead, use mapreduce.output.fileoutputformat.outputdir
14/04/09 09:58:47 INFO Configuration.deprecation: mapreduce.outputformat.class is deprecated. Instead, use mapreduce.job.outputformat.class
14/04/09 09:58:47 INFO Configuration.deprecation: mapred.cache.files.timestamps is deprecated. Instead, use mapreduce.job.cache.files.timestamps
14/04/09 09:58:47 INFO Configuration.deprecation: mapred.output.key.class is deprecated. Instead, use mapreduce.job.output.key.class
14/04/09 09:58:47 INFO Configuration.deprecation: mapred.working.dir is deprecated. Instead, use mapreduce.job.working.dir
14/04/09 09:58:47 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1396936838233_0001
14/04/09 09:58:47 INFO impl.YarnClientImpl: Submitted application application_1396936838233_0001 to ResourceManager at Master.Hadoop/192.168.6.77:8032
14/04/09 09:58:47 INFO mapreduce.Job: The url to track the job: http://Master.Hadoop:8088/proxy/application_1396936838233_0001/
14/04/09 09:58:47 INFO mapreduce.Job: Running job: job_1396936838233_0001
14/04/09 09:59:00 INFO mapreduce.Job: Job job_1396936838233_0001 running in uber mode : false
14/04/09 09:59:00 INFO mapreduce.Job: map 0% reduce 0%
14/04/09 09:59:14 INFO mapreduce.Job: map 33% reduce 0%
14/04/09 09:59:16 INFO mapreduce.Job: map 67% reduce 0%
14/04/09 09:59:19 INFO mapreduce.Job: map 100% reduce 0%
14/04/09 09:59:19 INFO mapreduce.Job: Job job_1396936838233_0001 completed successfully
14/04/09 09:59:19 INFO mapreduce.Job: Counters: 27
    File System Counters
        FILE: Number of bytes read=0
        FILE: Number of bytes written=271866
        FILE: Number of read operations=0
        FILE: Number of large read operations=0
        FILE: Number of write operations=0
        HDFS: Number of bytes read=295
        HDFS: Number of bytes written=44
        HDFS: Number of read operations=12
        HDFS: Number of large read operations=0
        HDFS: Number of write operations=6
    Job Counters
        Launched map tasks=3
        Other local map tasks=3
        Total time spent by all maps in occupied slots (ms)=43032
        Total time spent by all reduces in occupied slots (ms)=0
    Map-Reduce Framework
        Map input records=3
        Map output records=3
        Input split bytes=295
        Spilled Records=0
        Failed Shuffles=0
        Merged Map outputs=0
        GC time elapsed (ms)=590
        CPU time spent (ms)=6330
        Physical memory (bytes) snapshot=440934400
        Virtual memory (bytes) snapshot=3882573824
        Total committed heap usage (bytes)=160563200
    File Input Format Counters
        Bytes Read=0
    File Output Format Counters
        Bytes Written=44
14/04/09 09:59:19 INFO mapreduce.ImportJobBase: Transferred 44 bytes in 34.454 seconds (1.2771 bytes/sec)
14/04/09 09:59:19 INFO mapreduce.ImportJobBase: Retrieved 3 records.
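The log above warns that putting the password on the command line is insecure and suggests -P, which makes Sqoop prompt for the password on the console. A minimal sketch of the same import with that option follows; the `IMPORT_ARGS` variable and the fallback that only prints the command when sqoop is not installed are illustrative additions, not part of the original run.

```shell
# Same import as above, with -P (prompt on the console) replacing --password
IMPORT_ARGS="import --connect jdbc:mysql://192.168.6.77/test --username root -P --table demo_blog"

if command -v sqoop >/dev/null 2>&1; then
  # Word splitting of IMPORT_ARGS is intentional: each option becomes one argument
  sqoop $IMPORT_ARGS
else
  # On a machine without Sqoop, only show what would be executed
  echo "sqoop not found; would run: sqoop $IMPORT_ARGS"
fi
```

Because -P reads from the console, this form suits interactive use rather than unattended jobs.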

$ hdfs dfs -ls /user/hadoop/demo_blog
Found 4 items
-rw-r--r-- 3 hadoop supergroup 0 2014-04-09 09:59 /user/hadoop/demo_blog/_SUCCESS
-rw-r--r-- 3 hadoop supergroup 13 2014-04-09 09:59 /user/hadoop/demo_blog/part-m-00000
-rw-r--r-- 3 hadoop supergroup 13 2014-04-09 09:59 /user/hadoop/demo_blog/part-m-00001
-rw-r--r-- 3 hadoop supergroup 18 2014-04-09 09:59 /user/hadoop/demo_blog/part-m-00002
[hadoop@Master ~]$ hdfs dfs -cat /user/hadoop/demo_blog/part-m-0000*
1,micmiu.com
2,ctosun.com
3,baby.micmiu.com

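By default the imported files are written to /user/username/tablename/(files) on HDFS. For example, my current user is hadoop, so the actual path is /user/hadoop/demo_blog/(files).

To use a custom location, add the --warehouse-dir option, for example: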

sqoop import --connect jdbc:mysql://Master.Hadoop/test --username root --password micmiu --table demo_blog --warehouse-dir /user/micmiu/sqoop
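The path rule described above can be sketched as a small shell function. This is only an illustration of the naming convention, not Sqoop code; `sqoop_target_dir` is a hypothetical helper, and it assumes the HDFS home directory is /user/<current user>.

```shell
# Hypothetical helper: print the HDFS directory an import is expected to land in.
#   $1 = table name
#   $2 = value passed to --warehouse-dir (optional)
sqoop_target_dir() {
  table=$1
  # Without --warehouse-dir the parent is assumed to be the user's HDFS home
  parent=${2:-/user/$(id -un)}
  # Strip one trailing slash so the result never contains "//"
  printf '%s/%s\n' "${parent%/}" "$table"
}

# Default location for the current user
sqoop_target_dir demo_blog

# Location when --warehouse-dir /user/micmiu/sqoop is given
sqoop_target_dir demo_blog /user/micmiu/sqoop
```

The second call prints /user/micmiu/sqoop/demo_blog, which is the directory to pass to `hdfs dfs -ls` after the import finishes.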

sqoop import --connect jdbc:mysql://192.168.6.77/test --username root --password micmiu --table demo_log --warehouse-dir /user/micmiu/sqoop --split-by operator

The demo_log table has no primary key, so the import needs either --split-by xxx or -m 1.
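The choice between the two options can be sketched as follows. The `split_flags` helper is hypothetical and exists only to show the two variants side by side; the script prints the resulting commands for review rather than running them, and it uses -P instead of --password.

```shell
# Hypothetical helper: pick the parallelism flags for an import.
#   $1 = column to split on, or empty when no suitable column exists
split_flags() {
  if [ -n "$1" ]; then
    # Several mappers, with row ranges derived from this column
    printf '%s\n' "--split-by $1"
  else
    # A single mapper needs no split column
    printf '%s\n' "-m 1"
  fi
}

BASE="sqoop import --connect jdbc:mysql://192.168.6.77/test --username root -P --table demo_log"

# Variant 1: split on the operator column
echo "$BASE $(split_flags operator)"

# Variant 2: no split column, single mapper
echo "$BASE $(split_flags '')"
```

With -m 1 the whole table is read by one map task, so the output directory should contain a single part-m file; that is usually acceptable for small tables like this one.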

Execution output:

$ sqoop import --connect jdbc:mysql://192.168.6.77/test --username root --password micmiu --table demo_log --warehouse-dir /user/micmiu/sqoop --split-by operator
Warning: /usr/lib/hcatalog does not exist! HCatalog jobs will fail.
Please set $HCAT_HOME to the root of your HCatalog installation.
14/04/09 15:02:06 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.
14/04/09 15:02:06 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.
14/04/09 15:02:06 INFO tool.CodeGenTool: Beginning code generation
14/04/09 15:02:06 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `demo_log` AS t LIMIT 1
14/04/09 15:02:06 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `demo_log` AS t LIMIT 1
14/04/09 15:02:06 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /usr/local/hadoop
Note: /tmp/sqoop-hadoop/compile/dddc1bcdba30515f95a2d604f22e4fe9/demo_log.java uses or overrides a deprecated API.
Note: Recompile with -Xlint:deprecation for details.
14/04/09 15:02:07 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hadoop/compile/dddc1bcdba30515f95a2d604f22e4fe9/demo_log.jar
14/04/09 15:02:07 WARN manager.MySQLManager: It looks like you are importing from mysql.
14/04/09 15:02:07 WARN manager.MySQLManager: This transfer can be faster! Use the --direct
14/04/09 15:02:07 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.
14/04/09 15:02:07 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)
14/04/09 15:02:07 INFO mapreduce.ImportJobBase: Beginning import of demo_log
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/usr/local/hbase-0.98.0-hadoop2/lib/slf4j-log4j12-1.6.4.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]
14/04/09 15:02:07 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar
14/04/09 15:02:08 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps
14/04/09 15:02:08 INFO client.RMProxy: Connecting to ResourceManager at Master.Hadoop/192.168.6.77:8032
14/04/09 15:02:10 INFO db.DataDrivenDBInputFormat: BoundingValsQuery: SELECT MIN(`operator`), MAX(`operator`) FROM `demo_log`
14/04/09 15:02:10 WARN db.TextSplitter: Generating splits for a textual index column.
14/04/09 15:02:10 WARN db.TextSplitter: If your database sorts in a case-insensitive order, this may result in a partial import or duplicate records.
14/04/09 15:02:10 WARN db.TextSplitter: You are strongly encouraged to choose an integral split column.
14/04/09 15:02:10 INFO mapreduce.JobSubmitter: number of splits:4
14/04/09 15:02:10 INFO Configuration.deprecation: mapred.job.classpath.files is deprecated. Instead, use mapreduce.job.classpath.files
14/04/09 15:02:10 INFO Configuration.deprecation: user.name is deprecated. Instead, use mapreduce.job.user.name
14/04/09 15:02:10 INFO Configuration.deprecation: mapred.cache.files.filesizes is deprecated. Instead, use mapreduce.job.cache.files.filesizes
14/04/09 15:02:10 INFO Configuration.deprecation: mapred.cache.files is deprecated. Instead, use mapreduce.job.cache.files
14/04/09 15:02:10 INFO Configuration.deprecation: mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces
14/04/09 15:02:10 INFO Configuration.deprecation: mapred.output.value.class is deprecated. Instead, use mapreduce.job.output.value.class
14/04/09 15:02:10 INFO Configuration.deprecation: mapreduce.map.class is deprecated. Instead, use mapreduce.job.map.class
14/04/09 15:02:10 INFO Configuration.deprecation: mapred.job.name is deprecated. Instead, use mapreduce.job.name
14/04/09 15:02:10 INFO Configuration.deprecation: mapreduce.inputformat.class is deprecated. Instead, use mapreduce.job.inputformat.class
14/04/09 15:02:10 INFO Configuration.deprecation: mapred.output.dir is deprecated. Instead, use mapreduce.output.fileoutputformat.outputdir
14/04/09 15:02:10 INFO Configuration.deprecation: mapreduce.outputformat.class is deprecated. Instead, use mapreduce.job.outputformat.class
14/04/09 15:02:10 INFO Configuration.deprecation: mapred.cache.files.timestamps is deprecated. Instead, use mapreduce.job.cache.files.timestamps
14/04/09 15:02:10 INFO Configuration.deprecation: mapred.output.key.class is deprecated. Instead, use mapreduce.job.output.key.class
14/04/09 15:02:10 INFO Configuration.deprecation: mapred.working.dir is deprecated. Instead, use mapreduce.job.working.dir
14/04/09 15:02:10 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1396936838233_0003
14/04/09 15:02:10 INFO impl.YarnClientImpl: Submitted application application_1396936838233_0003 to ResourceManager at Master.Hadoop/192.168.6.77:8032
14/04/09 15:02:10 INFO mapreduce.Job: The url to track the job: http://Master.Hadoop:8088/proxy/application_1396936838233_0003/
14/04/09 15:02:10 INFO mapreduce.Job: Running job: job_1396936838233_0003
14/04/09 15:02:17 INFO mapreduce.Job: Job job_1396936838233_0003 running in uber mode : false
14/04/09 15:02:17 INFO mapreduce.Job: map 0% reduce 0%
14/04/09 15:02:28 INFO mapreduce.Job: map 25% reduce 0%
14/04/09 15:02:30 INFO mapreduce.Job: map 50% reduce 0%
14/04/09 15:02:33 INFO mapreduce.Job: map 100% reduce 0%
14/04/09 15:02:33 INFO mapreduce.Job: Job job_1396936838233_0003 completed successfully
14/04/09 15:02:33 INFO mapreduce.Job: Counters: 27
    File System Counters
        FILE: Number of bytes read=0
        FILE: Number of bytes written=362536
        FILE: Number of read operations=0
        FILE: Number of large read operations=0
        FILE: Number of write operations=0
        HDFS: Number of bytes read=516
        HDFS: Number of bytes written=56
        HDFS: Number of read operations=16
        HDFS: Number of large read operations=0
        HDFS: Number of write operations=8
    Job Counters
        Launched map tasks=4
        Other local map tasks=4
        Total time spent by all maps in occupied slots (ms)=44481
        Total time spent by all reduces in occupied slots (ms)=0
    Map-Reduce Framework
        Map input records=4
        Map output records=4
        Input split bytes=516
        Spilled Records=0
        Failed Shuffles=0
        Merged Map outputs=0
        GC time elapsed (ms)=429
        CPU time spent (ms)=6650
        Physical memory (bytes) snapshot=587669504
        Virtual memory (bytes) snapshot=5219356672
        Total committed heap usage (bytes)=205848576
    File Input Format Counters
        Bytes Read=0
    File Output Format Counters
        Bytes Written=56
14/04/09 15:02:33 INFO mapreduce.ImportJobBase: Transferred 56 bytes in 25.2746 seconds (2.2157 bytes/sec)
14/04/09 15:02:33 INFO mapreduce.ImportJobBase: Retrieved 4 records.

$ hdfs dfs -ls /user/micmiu/sqoop/demo_log
Found 5 items
-rw-r--r-- 3 hadoop supergroup 0 2014-04-09 15:02 /user/micmiu/sqoop/demo_log/_SUCCESS
-rw-r--r-- 3 hadoop supergroup 28 2014-04-09 15:02 /user/micmiu/sqoop/demo_log/part-m-00000
-rw-r--r-- 3 hadoop supergroup 0 2014-04-09 15:02 /user/micmiu/sqoop/demo_log/part-m-00001
-rw-r--r-- 3 hadoop supergroup 0 2014-04-09 15:02 /user/micmiu/sqoop/demo_log/part-m-00002
-rw-r--r-- 3 hadoop supergroup 28 2014-04-09 15:02 /user/micmiu/sqoop/demo_log/part-m-00003
$ hdfs dfs -cat /user/micmiu/sqoop/demo_log/part-m-0000*
michael,edit
michael,delete
micmiu,create
micmiu,update

[4] Importing data into Hive

sqoop import --connect jdbc:mysql://192.168.6.77/test --username root --password micmiu --table demo_blog --warehouse-dir /user/sqoop --hive-import --create-hive-table

$ sqoop import --connect jdbc:mysql://192.168.6.77/test --username root --password micmiu --table demo_blog --warehouse-dir /user/sqoop --hive-import --create-hive-table
Warning: /usr/lib/hcatalog does not exist! HCatalog jobs will fail.
Please set $HCAT_HOME to the root of your HCatalog installation.
14/04/09 10:44:21 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.
14/04/09 10:44:21 INFO tool.BaseSqoopTool: Using Hive-specific delimiters for output. You can override
14/04/09 10:44:21 INFO tool.BaseSqoopTool: delimiters with --fields-terminated-by, etc.
14/04/09 10:44:21 WARN tool.BaseSqoopTool: It seems that you've specified at least one of following:
14/04/09 10:44:21 WARN tool.BaseSqoopTool: --hive-home
14/04/09 10:44:21 WARN tool.BaseSqoopTool: --hive-overwrite
14/04/09 10:44:21 WARN tool.BaseSqoopTool: --create-hive-table
14/04/09 10:44:21 WARN tool.BaseSqoopTool: --hive-table
14/04/09 10:44:21 WARN tool.BaseSqoopTool: --hive-partition-key
14/04/09 10:44:21 WARN tool.BaseSqoopTool: --hive-partition-value
14/04/09 10:44:21 WARN tool.BaseSqoopTool: --map-column-hive
14/04/09 10:44:21 WARN tool.BaseSqoopTool: Without specifying parameter --hive-import. Please note that
14/04/09 10:44:21 WARN tool.BaseSqoopTool: those arguments will not be used in this session. Either
14/04/09 10:44:21 WARN tool.BaseSqoopTool: specify --hive-import to apply them correctly or remove them
14/04/09 10:44:21 WARN tool.BaseSqoopTool: from command line to remove this warning.
14/04/09 10:44:21 INFO tool.BaseSqoopTool: Please note that --hive-home, --hive-partition-key,
14/04/09 10:44:21 INFO tool.BaseSqoopTool: hive-partition-value and --map-column-hive options are
14/04/09 10:44:21 INFO tool.BaseSqoopTool: are also valid for HCatalog imports and exports
14/04/09 10:44:21 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.
14/04/09 10:44:21 INFO tool.CodeGenTool: Beginning code generation
14/04/09 10:44:21 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `demo_blog` AS t LIMIT 1
14/04/09 10:44:21 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `demo_blog` AS t LIMIT 1
14/04/09 10:44:21 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /usr/local/hadoop
Note: /tmp/sqoop-hadoop/compile/c071f02ecad006293202fd2c2fad0dce/demo_blog.java uses or overrides a deprecated API.
Note: Recompile with -Xlint:deprecation for details.
14/04/09 10:44:22 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hadoop/compile/c071f02ecad006293202fd2c2fad0dce/demo_blog.jar
14/04/09 10:44:22 WARN manager.MySQLManager: It looks like you are importing from mysql.
14/04/09 10:44:22 WARN manager.MySQLManager: This transfer can be faster! Use the --direct
14/04/09 10:44:22 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.
14/04/09 10:44:22 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)
14/04/09 10:44:22 INFO mapreduce.ImportJobBase: Beginning import of demo_blog
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/usr/local/hbase-0.98.0-hadoop2/lib/slf4j-log4j12-1.6.4.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]
14/04/09 10:44:22 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar
14/04/09 10:44:23 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps
14/04/09 10:44:23 INFO client.RMProxy: Connecting to ResourceManager at Master.Hadoop/192.168.6.77:8032
14/04/09 10:44:25 INFO db.DataDrivenDBInputFormat: BoundingValsQuery: SELECT MIN(`id`), MAX(`id`) FROM `demo_blog`
14/04/09 10:44:25 INFO mapreduce.JobSubmitter: number of splits:3
14/04/09 10:44:25 INFO Configuration.deprecation: mapred.job.classpath.files is deprecated. Instead, use mapreduce.job.classpath.files
14/04/09 10:44:25 INFO Configuration.deprecation: user.name is deprecated. Instead, use mapreduce.job.user.name
14/04/09 10:44:25 INFO Configuration.deprecation: mapred.cache.files.filesizes is deprecated. Instead, use mapreduce.job.cache.files.filesizes
14/04/09 10:44:25 INFO Configuration.deprecation: mapred.cache.files is deprecated. Instead, use mapreduce.job.cache.files
14/04/09 10:44:25 INFO Configuration.deprecation: mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces
14/04/09 10:44:25 INFO Configuration.deprecation: mapred.output.value.class is deprecated. Instead, use mapreduce.job.output.value.class
14/04/09 10:44:25 INFO Configuration.deprecation: mapreduce.map.class is deprecated. Instead, use mapreduce.job.map.class
14/04/09 10:44:25 INFO Configuration.deprecation: mapred.job.name is deprecated. Instead, use mapreduce.job.name
14/04/09 10:44:25 INFO Configuration.deprecation: mapreduce.inputformat.class is deprecated. Instead, use mapreduce.job.inputformat.class
14/04/09 10:44:25 INFO Configuration.deprecation: mapred.output.dir is deprecated. Instead, use mapreduce.output.fileoutputformat.outputdir
14/04/09 10:44:25 INFO Configuration.deprecation: mapreduce.outputformat.class is deprecated. Instead, use mapreduce.job.outputformat.class
14/04/09 10:44:25 INFO Configuration.deprecation: mapred.cache.files.timestamps is deprecated. Instead, use mapreduce.job.cache.files.timestamps
14/04/09 10:44:25 INFO Configuration.deprecation: mapred.output.key.class is deprecated. Instead, use mapreduce.job.output.key.class
14/04/09 10:44:25 INFO Configuration.deprecation: mapred.working.dir is deprecated. Instead, use mapreduce.job.working.dir
14/04/09 10:44:25 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1396936838233_0002
14/04/09 10:44:25 INFO impl.YarnClientImpl: Submitted application application_1396936838233_0002 to ResourceManager at Master.Hadoop/192.168.6.77:8032
14/04/09 10:44:25 INFO mapreduce.Job: The url to track the job: http://Master.Hadoop:8088/proxy/application_1396936838233_0002/
14/04/09 10:44:25 INFO mapreduce.Job: Running job: job_1396936838233_0002
14/04/09 10:44:33 INFO mapreduce.Job: Job job_1396936838233_0002 running in uber mode : false
14/04/09 10:44:33 INFO mapreduce.Job: map 0% reduce 0%
14/04/09 10:44:46 INFO mapreduce.Job: map 67% reduce 0%
14/04/09 10:44:48 INFO mapreduce.Job: map 100% reduce 0%
14/04/09 10:44:49 INFO mapreduce.Job: Job job_1396936838233_0002 completed successfully
14/04/09 10:44:49 INFO mapreduce.Job: Counters: 27
    File System Counters
        FILE: Number of bytes read=0
        FILE: Number of bytes written=271860
        FILE: Number of read operations=0
        FILE: Number of large read operations=0
        FILE: Number of write operations=0
        HDFS: Number of bytes read=295
        HDFS: Number of bytes written=44
        HDFS: Number of read operations=12
        HDFS: Number of large read operations=0
        HDFS: Number of write operations=6
    Job Counters
        Launched map tasks=3
        Other local map tasks=3
        Total time spent by all maps in occupied slots (ms)=34047
        Total time spent by all reduces in occupied slots (ms)=0
    Map-Reduce Framework
        Map input records=3
        Map output records=3
        Input split bytes=295
        Spilled Records=0
        Failed Shuffles=0
        Merged Map outputs=0
        GC time elapsed (ms)=505
        CPU time spent (ms)=5350
        Physical memory (bytes) snapshot=427388928
        Virtual memory (bytes) snapshot=3881439232
        Total committed heap usage (bytes)=171638784
    File Input Format Counters
        Bytes Read=0
    File Output Format Counters
        Bytes Written=44
14/04/09 10:44:49 INFO mapreduce.ImportJobBase: Transferred 44 bytes in 26.0401 seconds (1.6897 bytes/sec)
14/04/09 10:44:49 INFO mapreduce.ImportJobBase: Retrieved 3 records.
14/04/09 10:44:49 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `demo_blog` AS t LIMIT 1
14/04/09 10:44:49 INFO hive.HiveImport: Loading uploaded data into Hive
14/04/09 10:44:52 INFO hive.HiveImport: 14/04/09 10:44:52 INFO Configuration.deprecation: mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces
14/04/09 10:44:52 INFO hive.HiveImport: 14/04/09 10:44:52 INFO Configuration.deprecation: mapred.min.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize
14/04/09 10:44:52 INFO hive.HiveImport: 14/04/09 10:44:52 INFO Configuration.deprecation: mapred.reduce.tasks.speculative.execution is deprecated. Instead, use mapreduce.reduce.speculative
14/04/09 10:44:52 INFO hive.HiveImport: 14/04/09 10:44:52 INFO Configuration.deprecation: mapred.min.split.size.per.node is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.node
14/04/09 10:44:52 INFO hive.HiveImport: 14/04/09 10:44:52 INFO Configuration.deprecation: mapred.input.dir.recursive is deprecated. Instead, use mapreduce.input.fileinputformat.input.dir.recursive
14/04/09 10:44:52 INFO hive.HiveImport: 14/04/09 10:44:52 INFO Configuration.deprecation: mapred.min.split.size.per.rack is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.rack
14/04/09 10:44:52 INFO hive.HiveImport: 14/04/09 10:44:52 INFO Configuration.deprecation: mapred.max.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.maxsize
14/04/09 10:44:52 INFO hive.HiveImport: 14/04/09 10:44:52 INFO Configuration.deprecation: mapred.committer.job.setup.cleanup.needed is deprecated. Instead, use mapreduce.job.committer.setup.cleanup.needed
14/04/09 10:44:53 INFO hive.HiveImport: 14/04/09 10:44:53 WARN conf.HiveConf: DEPRECATED: hive.metastore.ds.retry.* no longer has any effect. Use hive.hmshandler.retry.* instead
14/04/09 10:44:53 INFO hive.HiveImport:
14/04/09 10:44:53 INFO hive.HiveImport: Logging initialized using configuration in file:/usr/local/hive-0.13.0-bin/conf/hive-log4j.properties
14/04/09 10:44:53 INFO hive.HiveImport: SLF4J: Class path contains multiple SLF4J bindings.
14/04/09 10:44:53 INFO hive.HiveImport: SLF4J: Found binding in [jar:file:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]
14/04/09 10:44:53 INFO hive.HiveImport: SLF4J: Found binding in [jar:file:/usr/local/hbase-0.98.0-hadoop2/lib/slf4j-log4j12-1.6.4.jar!/org/slf4j/impl/StaticLoggerBinder.class]
14/04/09 10:44:53 INFO hive.HiveImport: SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
14/04/09 10:44:53 INFO hive.HiveImport: SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]
14/04/09 10:44:57 INFO hive.HiveImport: OK
14/04/09 10:44:57 INFO hive.HiveImport: Time taken: 0.773 seconds
14/04/09 10:44:57 INFO hive.HiveImport: Loading data to table default.demo_blog
14/04/09 10:44:57 INFO hive.HiveImport: Table default.demo_blog stats: [numFiles=4, numRows=0, totalSize=44, rawDataSize=0]
14/04/09 10:44:57 INFO hive.HiveImport: OK
14/04/09 10:44:57 INFO hive.HiveImport: Time taken: 0.25 seconds
14/04/09 10:44:57 INFO hive.HiveImport: Hive import complete.
14/04/09 10:44:57 INFO hive.HiveImport: Export directory is empty, removing it

hive> show tables;
OK
demo_blog
hbase_table_1
hbase_table_2
hbase_table_3
micmiu_blog
micmiu_hx_master
pokes
xflow_dstip
Time taken: 0.073 seconds, Fetched: 8 row(s)
hive> select * from demo_blog;
OK
1 micmiu.com
2 ctosun.com
3 baby.micmiu.com
Time taken: 0.506 seconds, Fetched: 3 row(s)
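The same verification can be done without the interactive prompt by comparing the Hive row count with the number of records Sqoop reported. This is a rough sketch: `rows_match` is a hypothetical helper, the expected count of 3 comes from the "Retrieved 3 records." line in the import log, and it assumes the Hive CLI's -S (silent) and -e (execute query) options print only the count on standard output.

```shell
# Number of rows Sqoop reported for demo_blog in the import log
EXPECTED_ROWS=3

# Hypothetical helper: compare an expected count with raw query output,
# ignoring any surrounding whitespace or newlines.
rows_match() {
  actual=$(printf '%s' "$2" | tr -d '[:space:]')
  [ "$actual" = "$1" ]
}

if command -v hive >/dev/null 2>&1; then
  # -S suppresses informational output, -e runs the quoted statement
  count=$(hive -S -e "SELECT COUNT(*) FROM demo_blog")
  if rows_match "$EXPECTED_ROWS" "$count"; then
    echo "row count matches: $EXPECTED_ROWS"
  else
    echo "row count mismatch: expected $EXPECTED_ROWS, got $count"
  fi
else
  echo "hive CLI not found; skipping the check"
fi
```

A count query launches a MapReduce job, so on a small cluster it can take noticeably longer than the `select *` shown above.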

[5] Importing data into HBase

sqoop import --connect jdbc:mysql://192.168.6.77/test --username root --password micmiu --table demo_blog --hbase-table demo_sqoop2hbase --hbase-create-table --hbase-row-key id --column-family url

$ sqoop import --connect jdbc:mysql://192.168.6.77/test --username root --password micmiu --table demo_blog --hbase-table demo_sqoop2hbase --hbase-create-table --hbase-row-key id --column-family url
Warning: /usr/lib/hcatalog does not exist! HCatalog jobs will fail.
Please set $HCAT_HOME to the root of your HCatalog installation.
14/04/09 16:23:38 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.
14/04/09 16:23:38 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.
14/04/09 16:23:38 INFO tool.CodeGenTool: Beginning code generation
14/04/09 16:23:39 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `demo_blog` AS t LIMIT 1
14/04/09 16:23:39 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `demo_blog` AS t LIMIT 1
14/04/09 16:23:39 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /usr/local/hadoop
Note: /tmp/sqoop-hadoop/compile/85408c854ee8fba75bbb2458e5e25093/demo_blog.java uses or overrides a deprecated API.
Note: Recompile with -Xlint:deprecation for details.
14/04/09 16:23:40 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hadoop/compile/85408c854ee8fba75bbb2458e5e25093/demo_blog.jar
14/04/09 16:23:40 WARN manager.MySQLManager: It looks like you are importing from mysql.
14/04/09 16:23:40 WARN manager.MySQLManager: This transfer can be faster! Use the --direct
14/04/09 16:23:40 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.
14/04/09 16:23:40 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)
14/04/09 16:23:40 INFO mapreduce.ImportJobBase: Beginning import of demo_blog
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/usr/local/hadoop-2.2.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/usr/local/hbase-0.98.0-hadoop2/lib/slf4j-log4j12-1.6.4.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]
14/04/09 16:23:40 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar
14/04/09 16:23:40 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps
14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:zookeeper.version=3.4.5-1392090, built on 09/30/2012 17:52 GMT
14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:host.name=Master.Hadoop
14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:java.version=1.6.0_20
14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:java.vendor=Sun Microsystems Inc.
14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:java.home=/java/jdk1.6.0_20/jre
14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:java.class.path=/usr/local/hadoop/etc/hadoop: .......
14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:java.library.path=/usr/local/hadoop/lib/native
14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:java.io.tmpdir=/tmp
14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:java.compiler=
14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:os.name=Linux
14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:os.arch=amd64
14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:os.version=2.6.32-71.el6.x86_64
14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:user.name=hadoop
14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:user.home=/home/hadoop
14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Client environment:user.dir=/home/hadoop
14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Initiating client connection, connectString=Slave6.Hadoop:2181,Slave5.Hadoop:2181,Slave7.Hadoop:2181 sessionTimeout=90000 watcher=hconnection-0x57c8b24d, quorum=Slave6.Hadoop:2181,Slave5.Hadoop:2181,Slave7.Hadoop:2181, baseZNode=/hbase
14/04/09 16:23:41 INFO zookeeper.ClientCnxn: Opening socket connection to server Slave5.Hadoop/192.168.8.205:2181. Will not attempt to authenticate using SASL (Unable to locate a login configuration)
14/04/09 16:23:41 INFO zookeeper.RecoverableZooKeeper: Process identifier=hconnection-0x57c8b24d connecting to ZooKeeper ensemble=Slave6.Hadoop:2181,Slave5.Hadoop:2181,Slave7.Hadoop:2181
14/04/09 16:23:41 INFO zookeeper.ClientCnxn: Socket connection established to Slave5.Hadoop/192.168.8.205:2181, initiating session
14/04/09 16:23:41 INFO zookeeper.ClientCnxn: Session establishment complete on server Slave5.Hadoop/192.168.8.205:2181, sessionid = 0x453fecb6c50009, negotiated timeout = 90000
14/04/09 16:23:41 INFO Configuration.deprecation: hadoop.native.lib is deprecated. Instead, use io.native.lib.available
14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Initiating client connection, connectString=Slave6.Hadoop:2181,Slave5.Hadoop:2181,Slave7.Hadoop:2181 sessionTimeout=90000 watcher=catalogtracker-on-hconnection-0x57c8b24d, quorum=Slave6.Hadoop:2181,Slave5.Hadoop:2181,Slave7.Hadoop:2181, baseZNode=/hbase
14/04/09 16:23:41 INFO zookeeper.ClientCnxn: Opening socket connection to server Slave7.Hadoop/192.168.8.207:2181. Will not attempt to authenticate using SASL (Unable to locate a login configuration)
14/04/09 16:23:41 INFO zookeeper.RecoverableZooKeeper: Process identifier=catalogtracker-on-hconnection-0x57c8b24d connecting to ZooKeeper ensemble=Slave6.Hadoop:2181,Slave5.Hadoop:2181,Slave7.Hadoop:2181
14/04/09 16:23:41 INFO zookeeper.ClientCnxn: Socket connection established to Slave7.Hadoop/192.168.8.207:2181, initiating session
14/04/09 16:23:41 INFO zookeeper.ClientCnxn: Session establishment complete on server Slave7.Hadoop/192.168.8.207:2181, sessionid = 0x2453fecb6f50008, negotiated timeout = 90000
14/04/09 16:23:41 INFO zookeeper.ZooKeeper: Session: 0x2453fecb6f50008 closed
14/04/09 16:23:41 INFO zookeeper.ClientCnxn: EventThread shut down
14/04/09 16:23:41 INFO mapreduce.HBaseImportJob: Creating missing HBase table demo_sqoop2hbase
14/04/09 16:23:42 INFO zookeeper.ZooKeeper: Initiating client connection, connectString=Slave6.Hadoop:2181,Slave5.Hadoop:2181,Slave7.Hadoop:2181 sessionTimeout=90000 watcher=catalogtracker-on-hconnection-0x57c8b24d, quorum=Slave6.Hadoop:2181,Slave5.Hadoop:2181,Slave7.Hadoop:2181, baseZNode=/hbase
14/04/09 16:23:42 INFO zookeeper.RecoverableZooKeeper: Process identifier=catalogtracker-on-hconnection-0x57c8b24d connecting to ZooKeeper ensemble=Slave6.Hadoop:2181,Slave5.Hadoop:2181,Slave7.Hadoop:2181
14/04/09 16:23:42 INFO zookeeper.ClientCnxn: Opening socket connection to server Slave7.Hadoop/192.168.8.207:2181.
Will not attempt to authenticate using SASL (Unable to locate a login configuration) 14/04/09 16:23:42 INFO zookeeper.ClientCnxn: Socket connection established to Slave7.Hadoop/192.168.8.207:2181, initiating session 14/04/09 16:23:42 INFO zookeeper.ClientCnxn: Session establishment complete on server Slave7.Hadoop/192.168.8.207:2181, sessionid = 0x2453fecb6f50009, negotiated timeout = 90000 14/04/09 16:23:42 INFO zookeeper.ZooKeeper: Session: 0x2453fecb6f50009 closed 14/04/09 16:23:42 INFO zookeeper.ClientCnxn: EventThread shut down 14/04/09 16:23:42 INFO client.RMProxy: Connecting to ResourceManager at Master.Hadoop/192.168.6.77:8032 14/04/09 16:23:47 INFO db.DataDrivenDBInputFormat: BoundingValsQuery: SELECT MIN(`id`), MAX(`id`) FROM `demo_blog` 14/04/09 16:23:47 INFO mapreduce.JobSubmitter: number of splits:3 14/04/09 16:23:47 INFO Configuration.deprecation: mapred.job.classpath.files is deprecated. Instead, use mapreduce.job.classpath.files 14/04/09 16:23:47 INFO Configuration.deprecation: user.name is deprecated. Instead, use mapreduce.job.user.name 14/04/09 16:23:47 INFO Configuration.deprecation: mapred.cache.files.filesizes is deprecated. Instead, use mapreduce.job.cache.files.filesizes 14/04/09 16:23:47 INFO Configuration.deprecation: mapred.cache.files is deprecated. Instead, use mapreduce.job.cache.files 14/04/09 16:23:47 INFO Configuration.deprecation: mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces 14/04/09 16:23:47 INFO Configuration.deprecation: mapred.output.value.class is deprecated. Instead, use mapreduce.job.output.value.class 14/04/09 16:23:47 INFO Configuration.deprecation: mapreduce.map.class is deprecated. Instead, use mapreduce.job.map.class 14/04/09 16:23:47 INFO Configuration.deprecation: mapred.job.name is deprecated. Instead, use mapreduce.job.name 14/04/09 16:23:47 INFO Configuration.deprecation: mapreduce.inputformat.class is deprecated. 
Instead, use mapreduce.job.inputformat.class 14/04/09 16:23:47 INFO Configuration.deprecation: mapreduce.outputformat.class is deprecated. Instead, use mapreduce.job.outputformat.class 14/04/09 16:23:47 INFO Configuration.deprecation: mapred.cache.files.timestamps is deprecated. Instead, use mapreduce.job.cache.files.timestamps 14/04/09 16:23:47 INFO Configuration.deprecation: mapred.output.key.class is deprecated. Instead, use mapreduce.job.output.key.class 14/04/09 16:23:47 INFO Configuration.deprecation: mapred.working.dir is deprecated. Instead, use mapreduce.job.working.dir 14/04/09 16:23:47 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1396936838233_0005 14/04/09 16:23:47 INFO impl.YarnClientImpl: Submitted application application_1396936838233_0005 to ResourceManager at Master.Hadoop/192.168.6.77:8032 14/04/09 16:23:47 INFO mapreduce.Job: The url to track the job: http://Master.Hadoop:8088/proxy/application_1396936838233_0005/ 14/04/09 16:23:47 INFO mapreduce.Job: Running job: job_1396936838233_0005 14/04/09 16:23:55 INFO mapreduce.Job: Job job_1396936838233_0005 running in uber mode : false 14/04/09 16:23:55 INFO mapreduce.Job: map 0% reduce 0% 14/04/09 16:24:05 INFO mapreduce.Job: map 33% reduce 0% 14/04/09 16:24:12 INFO mapreduce.Job: map 100% reduce 0% 14/04/09 16:24:12 INFO mapreduce.Job: Job job_1396936838233_0005 completed successfully 14/04/09 16:24:12 INFO mapreduce.Job: Counters: 27 File System Counters FILE: Number of bytes read=0 FILE: Number of bytes written=354636 FILE: Number of read operations=0 FILE: Number of large read operations=0 FILE: Number of write operations=0 HDFS: Number of bytes read=295 HDFS: Number of bytes written=0 HDFS: Number of read operations=3 HDFS: Number of large read operations=0 HDFS: Number of write operations=0 Job Counters Launched map tasks=3 Other local map tasks=3 Total time spent by all maps in occupied slots (ms)=35297 Total time spent by all reduces in occupied slots (ms)=0 Map-Reduce Framework 
Map input records=3 Map output records=3 Input split bytes=295 Spilled Records=0 Failed Shuffles=0 Merged Map outputs=0 GC time elapsed (ms)=381 CPU time spent (ms)=11050 Physical memory (bytes) snapshot=543367168 Virtual memory (bytes) snapshot=3918925824 Total committed heap usage (bytes)=156958720 File Input Format Counters Bytes Read=0 File Output Format Counters Bytes Written=0 14/04/09 16:24:12 INFO mapreduce.ImportJobBase: Transferred 0 bytes in 29.7126 seconds (0 bytes/sec) 14/04/09 16:24:12 INFO mapreduce.ImportJobBase: Retrieved 3 records.
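The log above warns that passing the password with `--password` is insecure and suggests `-P`, which makes Sqoop prompt for the password on the console. A minimal sketch of the same HBase import using `-P` follows. The `SQOOP_ARGS` array, the `print_cmd` helper, and the `RUN_SQOOP` switch are names invented for this sketch and are not part of Sqoop; by default the script only prints the command so it can be reviewed first.

```shell
#!/usr/bin/env bash
# Sketch only: the HBase import from this article with -P replacing --password.
# SQOOP_ARGS, print_cmd and RUN_SQOOP are hypothetical names for illustration.
set -euo pipefail

SQOOP_ARGS=(
  import
  --connect jdbc:mysql://192.168.6.77/test
  --username root
  -P                                  # prompt for the password at run time
  --table demo_blog
  --hbase-table demo_sqoop2hbase
  --hbase-create-table                # create the HBase table if it is missing
  --hbase-row-key id                  # MySQL column used as the HBase row key
  --column-family url                 # target column family for all columns
)

# Print the command line that would be executed.
print_cmd() {
  printf 'sqoop'
  printf ' %s' "${SQOOP_ARGS[@]}"
  printf '\n'
}

if [ "${RUN_SQOOP:-0}" = "1" ]; then
  # Requires sqoop on PATH and a reachable MySQL/HBase setup.
  sqoop "${SQOOP_ARGS[@]}"
else
  print_cmd
fi
```

Run it as-is to inspect the command, or with `RUN_SQOOP=1` to execute the import; Sqoop then asks for the MySQL password interactively, so it does not end up in the shell history.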

hbase(main):009:0> list
TABLE
demo_sqoop2hbase
table_02
table_03
test_table
xyz
5 row(s) in 0.0310 seconds

=> ["demo_sqoop2hbase", "table_02", "table_03", "test_table", "xyz"]
hbase(main):010:0> scan "demo_sqoop2hbase"
ROW COLUMN+CELL
1 column=url:blog, timestamp=1397031850700, value=micmiu.com
2 column=url:blog, timestamp=1397031844106, value=ctosun.com
3 column=url:blog, timestamp=1397031849888, value=baby.micmiu.com
3 row(s) in 0.0730 seconds

hbase(main):011:0> describe "demo_sqoop2hbase"
DESCRIPTION ENABLED
'demo_sqoop2hbase', {NAME => 'url', DATA_BLOCK_ENCODING => 'NONE', BLOOMFILTER => 'ROW', REPLICATION_SCOPE => '0', VERSIONS => '1', COMPRESSION => 'NONE', MIN_VERSIONS => '0', TTL => '2147483647', KEEP_DELETED_CELLS => 'false', BLOCKSIZE => '65536', IN_MEMORY => 'false', BLOCKCACHE => 'true'} true
1 row(s) in 0.0580 seconds

hbase(main):012:0>

Reference:

- http://sqoop.apache.org/docs/1.4.4/SqoopUserGuide.html

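Original article: Sqoop安装配置及演示 (Sqoop Installation, Configuration, and Demonstration). Thanks to the original author for sharing.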

ホットAIツール

Undresser.AI Undress

リアルなヌード写真を作成する AI 搭載アプリ

AI Clothes Remover

写真から衣服を削除するオンライン AI ツール。

Undress AI Tool

脱衣画像を無料で

Clothoff.io

AI衣類リムーバー

Video Face Swap

完全無料の AI 顔交換ツールを使用して、あらゆるビデオの顔を簡単に交換できます。

人気の記事

ホットツール

メモ帳++7.3.1

使いやすく無料のコードエディター

SublimeText3 中国語版

中国語版、とても使いやすい

ゼンドスタジオ 13.0.1

強力な PHP 統合開発環境

ドリームウィーバー CS6

ビジュアル Web 開発ツール

SublimeText3 Mac版

神レベルのコード編集ソフト(SublimeText3)

ホットトピック

1665

1665

14

14

1424

1424

52

52

1322

1322

25

25

1270

1270

29

29

1249

1249

24

24

Win11システムに中国語言語パックをインストールできない問題の解決策

Mar 09, 2024 am 09:48 AM

Win11システムに中国語言語パックをインストールできない問題の解決策

Mar 09, 2024 am 09:48 AM

Win11 システムに中国語言語パックをインストールできない問題の解決策 Windows 11 システムの発売に伴い、多くのユーザーは新しい機能やインターフェイスを体験するためにオペレーティング システムをアップグレードし始めました。ただし、一部のユーザーは、アップグレード後に中国語の言語パックをインストールできず、エクスペリエンスに問題が発生したことに気づきました。この記事では、Win11 システムに中国語言語パックをインストールできない理由について説明し、ユーザーがこの問題を解決するのに役立ついくつかの解決策を提供します。原因分析 まず、Win11 システムの機能不全を分析しましょう。

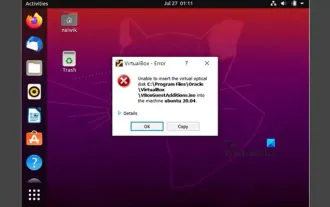

VirtualBox にゲスト追加機能をインストールできない

Mar 10, 2024 am 09:34 AM

VirtualBox にゲスト追加機能をインストールできない

Mar 10, 2024 am 09:34 AM

OracleVirtualBox の仮想マシンにゲスト追加をインストールできない場合があります。 [デバイス] > [InstallGuestAdditionsCDImage] をクリックすると、以下に示すようなエラーがスローされます。 VirtualBox - エラー: 仮想ディスク C: プログラミング ファイルOracleVirtualBoxVBoxGuestAdditions.iso を ubuntu マシンに挿入できません この投稿では、次の場合に何が起こるかを理解します。 VirtualBox にゲスト追加機能をインストールできません。 VirtualBox にゲスト追加機能をインストールできない Virtua にインストールできない場合

Baidu Netdisk は正常にダウンロードされたものの、インストールできない場合はどうすればよいですか?

Mar 13, 2024 pm 10:22 PM

Baidu Netdisk は正常にダウンロードされたものの、インストールできない場合はどうすればよいですか?

Mar 13, 2024 pm 10:22 PM

Baidu Netdisk のインストール ファイルを正常にダウンロードしたにもかかわらず、正常にインストールできない場合は、ソフトウェア ファイルの整合性にエラーがあるか、残っているファイルとレジストリ エントリに問題がある可能性があります。 Baidu Netdisk はダウンロードできましたが、インストールできない問題の分析を紹介します。 Baidu Netdisk は正常にダウンロードされたがインストールできない問題の分析 1. インストール ファイルの整合性を確認します。ダウンロードしたインストール ファイルが完全で、破損していないことを確認します。再度ダウンロードするか、別の信頼できるソースからインストール ファイルをダウンロードしてみてください。 2. ウイルス対策ソフトウェアとファイアウォールをオフにする: ウイルス対策ソフトウェアやファイアウォール プログラムによっては、インストール プログラムが正常に実行されない場合があります。ウイルス対策ソフトウェアとファイアウォールを無効にするか終了してから、インストールを再実行してください。

Linux Bashrc の機能、構成、使用法を理解する

Mar 20, 2024 pm 03:30 PM

Linux Bashrc の機能、構成、使用法を理解する

Mar 20, 2024 pm 03:30 PM

Linux Bashrc について: 機能、構成、および使用法 Linux システムでは、Bashrc (BourneAgainShellruncommands) は非常に重要な構成ファイルであり、システムの起動時に自動的に実行されるさまざまなコマンドと設定が含まれています。 Bashrc ファイルは通常、ユーザーのホーム ディレクトリにある隠しファイルであり、その機能はユーザーの Bashshell 環境をカスタマイズすることです。 1. Bashrc関数の設定環境

Android アプリを Linux にインストールするにはどうすればよいですか?

Mar 19, 2024 am 11:15 AM

Android アプリを Linux にインストールするにはどうすればよいですか?

Mar 19, 2024 am 11:15 AM

Linux への Android アプリケーションのインストールは、多くのユーザーにとって常に懸念事項であり、特に Android アプリケーションを使用したい Linux ユーザーにとって、Android アプリケーションを Linux システムにインストールする方法をマスターすることは非常に重要です。 Linux 上で Android アプリケーションを直接実行するのは Android プラットフォームほど簡単ではありませんが、エミュレータやサードパーティのツールを使用すれば、Linux 上で Android アプリケーションを快適に楽しむことができます。ここでは、Linux システムに Android アプリケーションをインストールする方法を紹介します。

Ubuntu 24.04 に Podman をインストールする方法

Mar 22, 2024 am 11:26 AM

Ubuntu 24.04 に Podman をインストールする方法

Mar 22, 2024 am 11:26 AM

Docker を使用したことがある場合は、デーモン、コンテナー、およびそれらの機能を理解する必要があります。デーモンは、コンテナがシステムですでに使用されているときにバックグラウンドで実行されるサービスです。 Podman は、Docker などのデーモンに依存せずにコンテナーを管理および作成するための無料の管理ツールです。したがって、長期的なバックエンド サービスを必要とせずにコンテナーを管理できるという利点があります。さらに、Podman を使用するにはルートレベルの権限は必要ありません。このガイドでは、Ubuntu24 に Podman をインストールする方法について詳しく説明します。システムを更新するには、まずシステムを更新し、Ubuntu24 のターミナル シェルを開く必要があります。インストールプロセスとアップグレードプロセスの両方で、コマンドラインを使用する必要があります。シンプルな

Ubuntu 24.04 に Ubuntu Notes アプリをインストールして実行する方法

Mar 22, 2024 pm 04:40 PM

Ubuntu 24.04 に Ubuntu Notes アプリをインストールして実行する方法

Mar 22, 2024 pm 04:40 PM

高校で勉強しているときに、同じクラスの他の生徒よりも多くのメモを取る、非常に明確で正確なメモを取る生徒もいます。メモをとることが趣味である人もいますが、重要なことについての小さな情報をすぐに忘れてしまうため、メモをとることが必需品である人もいます。 Microsoft の NTFS アプリケーションは、通常の講義以外にも重要なメモを保存したい学生にとって特に役立ちます。この記事では、Ubuntu24へのUbuntuアプリケーションのインストールについて説明します。 Ubuntu システムの更新 Ubuntu インストーラーをインストールする前に、Ubuntu24 では、新しく構成されたシステムが更新されていることを確認する必要があります。 Ubuntu システムでは最も有名な「a」を使用できます

Win7システムにGo言語をインストールするにはどうすればよいですか?

Mar 27, 2024 pm 01:42 PM

Win7システムにGo言語をインストールするにはどうすればよいですか?

Mar 27, 2024 pm 01:42 PM

Win7 システムに Go 言語をインストールするのは比較的簡単な操作で、次の手順に従ってください。以下では、Win7 システムに Go 言語をインストールする方法を詳しく紹介します。ステップ 1: Go 言語のインストール パッケージをダウンロードする. まず、Go 言語の公式 Web サイト (https://golang.org/) を開いて、ダウンロード ページに入ります。ダウンロード ページで、Win7 システムと互換性のあるインストール パッケージのバージョンを選択してダウンロードします。 [ダウンロード] ボタンをクリックし、インストール パッケージがダウンロードされるまで待ちます。ステップ 2: Go 言語をインストールする