How SpringBoot integrates DataWorks

Notes

The goal here is to pull scripts from DataWorks and store them locally.

The scripts come in two parts:

1. The ODPS scripts developed in DataWorks, obtained through the OpenAPI.
2. The table creation (DDL) scripts, obtained by connecting to MaxCompute with the DataWorks connection information.

The Alibaba Cloud DataWorks OpenAPI caps paginated queries at 100 records per page, so pulling all scripts requires querying page by page.
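With at most 100 records per page, the number of requests follows from the total count by ceiling division. The tool class later calls a `setPageSize` helper whose body is not shown; `clampPageSize` below is a hypothetical stand-in that assumes a default of 100 and caps larger values at 100.

```java
/** Paging arithmetic for an API that returns at most 100 records per page (sketch). */
final class PageMath {
    /** Per-page limit stated above for the DataWorks OpenAPI. */
    static final int MAX_PAGE_SIZE = 100;

    private PageMath() {}

    /** Hypothetical stand-in for the unshown setPageSize helper: null or non-positive -> 100, larger -> capped at 100. */
    static int clampPageSize(Integer requested) {
        if (requested == null || requested <= 0) {
            return MAX_PAGE_SIZE;
        }
        return Math.min(requested, MAX_PAGE_SIZE);
    }

    /** Number of pages needed for totalCount records (ceiling division). */
    static long pageCount(long totalCount, int pageSize) {
        if (totalCount <= 0) {
            return 0;
        }
        return (totalCount + pageSize - 1) / pageSize;
    }

    public static void main(String[] args) {
        int size = clampPageSize(500);            // capped to 100
        System.out.println(pageCount(250, size)); // 3 requests: 100 + 100 + 50
    }
}
```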

This project connects to MaxCompute either through the MaxCompute SDK or through its JDBC driver, and the Spring Boot application drives whichever connection is chosen.
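For the JDBC route, the odps-jdbc driver takes a URL of the form `jdbc:odps:<endpoint>?project=<project>`, with the AccessKey ID and secret passed as user and password. The helper below is a sketch that combines that URL format with the region endpoint pattern used later in the tool class; the class and method names are hypothetical.

```java
/** Builds MaxCompute JDBC URLs (sketch; class and method names are hypothetical). */
final class OdpsJdbcUrls {
    /** Region-specific endpoint pattern, same as MAX_COMPUTE_JDBC_URL_FORMAT in the tool class. */
    static final String ENDPOINT_FORMAT = "http://service.%s.maxcompute.aliyun.com/api";

    private OdpsJdbcUrls() {}

    static String endpoint(String region) {
        return String.format(ENDPOINT_FORMAT, region);
    }

    /** odps-jdbc URL format: jdbc:odps:<endpoint>?project=<project>. */
    static String jdbcUrl(String region, String project) {
        return "jdbc:odps:" + endpoint(region) + "?project=" + project;
    }

    public static void main(String[] args) {
        // With the driver on the classpath, the URL would be passed to
        // DriverManager.getConnection(url, accessId, accessKey)
        System.out.println(jdbcUrl("cn-chengdu", "my_project_dev"));
        // prints jdbc:odps:http://service.cn-chengdu.maxcompute.aliyun.com/api?project=my_project_dev
    }
}
```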

Integration implementation

The implementation is essentially a utility class. If needed, it can be registered as a Spring bean and injected into the container.

Adding dependencies

```xml
<properties>
    <java.version>1.8</java.version>
    <!-- MaxCompute SDK version -->
    <max-compute-sdk.version>0.40.8-public</max-compute-sdk.version>
    <!-- MaxCompute JDBC version -->
    <max-compute-jdbc.version>3.0.1</max-compute-jdbc.version>
    <!-- DataWorks SDK version -->
    <dataworks-sdk.version>3.4.2</dataworks-sdk.version>
    <aliyun-java-sdk.version>4.5.20</aliyun-java-sdk.version>
</properties>

<dependencies>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-web</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-configuration-processor</artifactId>
        <optional>true</optional>
    </dependency>
    <dependency>
        <groupId>org.projectlombok</groupId>
        <artifactId>lombok</artifactId>
        <optional>true</optional>
    </dependency>
    <!-- MaxCompute SDK -->
    <dependency>
        <groupId>com.aliyun.odps</groupId>
        <artifactId>odps-sdk-core</artifactId>
        <version>${max-compute-sdk.version}</version>
    </dependency>
    <!-- MaxCompute JDBC -->
    <dependency>
        <groupId>com.aliyun.odps</groupId>
        <artifactId>odps-jdbc</artifactId>
        <version>${max-compute-jdbc.version}</version>
        <classifier>jar-with-dependencies</classifier>
    </dependency>
    <!-- DataWorks needs both the Aliyun core SDK and the DataWorks SDK itself -->
    <dependency>
        <groupId>com.aliyun</groupId>
        <artifactId>aliyun-java-sdk-core</artifactId>
        <version>${aliyun-java-sdk.version}</version>
    </dependency>
    <dependency>
        <groupId>com.aliyun</groupId>
        <artifactId>aliyun-java-sdk-dataworks-public</artifactId>
        <version>${dataworks-sdk.version}</version>
    </dependency>
</dependencies>
```

Request parameter class

```java
import lombok.Data;

/**
 * @Description
 * @Author itdl
 * @Date 2022/08/09 15:12
 */
@Data
public class DataWorksOpenApiConnParam {
    /** Region, e.g. cn-shanghai */
    private String region;
    /** AccessKey ID */
    private String aliyunAccessId;
    /** AccessKey secret */
    private String aliyunAccessKey;
    /** Endpoint, i.e. the URL prefix of the API */
    private String endPoint;
    /** Data source type, e.g. odps */
    private String datasourceType;
    /** Project the scripts belong to */
    private String project;
    /** Project environment: dev or prod */
    private String projectEnv;
}
```

Tool class

Base class preparation: the callback invoked on the pulled scripts

Why is a callback needed? Pulling every script and merging the results of all pages into one list can cause an out-of-memory error. With a callback, each page is processed inside the paging loop as soon as it arrives, so only one page is held in memory at a time.
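The source does not include the callback types themselves. Judging from how they are used later (`CallBack.FileCallBack` receives one page of files, `CallBack.DdlCallBack` receives a table name and its DDL), a minimal sketch could look like this. In the real tool class `FileCallBack` would take `List<ListFilesResponse.Data.File>`; here it is generic so the sketch compiles with the JDK alone.

```java
import java.util.Arrays;
import java.util.List;

/** Callback types reconstructed from their usage (sketch). */
interface CallBack {
    /** Handles one page of files; T stands in for ListFilesResponse.Data.File. */
    @FunctionalInterface
    interface FileCallBack<T> {
        void handle(List<T> files);
    }

    /** Handles one table's CREATE TABLE statement. */
    @FunctionalInterface
    interface DdlCallBack {
        void handle(String tableName, String ddl);
    }
}

class CallBackDemo {
    public static void main(String[] args) {
        int[] processed = {0};
        // Per-page handler: work on the page, keep only a running count
        CallBack.FileCallBack<String> onPage = files -> {
            for (String name : files) {
                System.out.println(name);
            }
            processed[0] += files.size();
        };
        onPage.handle(Arrays.asList("test_001", "test_002")); // page 1
        onPage.handle(Arrays.asList("test_003"));             // page 2
        CallBack.DdlCallBack onDdl = (table, ddl) -> System.out.println(table + " -> " + ddl);
        onDdl.handle("test_abc_info", "CREATE TABLE IF NOT EXISTS test_abc_info (...);");
        System.out.println("files processed: " + processed[0]);
    }
}
```

Because the page list goes out of scope after each `handle` call, memory use stays bounded by one page rather than the whole result set.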

Initialization

This step instantiates the client for the DataWorks OpenAPI and initializes the MaxCompute connection utility, using either JDBC or the SDK depending on the odpsSdk flag.
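Both `build*` methods below share one rule for the MaxCompute project name: in the dev environment it is the DataWorks project name plus `_dev`; otherwise the two names are identical. Pulled out into a pure function (a hypothetical helper, not part of the original), the rule can be tested on its own:

```java
/** Derives the MaxCompute project name from DataWorks settings (hypothetical helper). */
final class MaxComputeProjects {
    private MaxComputeProjects() {}

    /**
     * Mirrors the rule in buildMaxComputeSdkUtil/buildMaxComputeJdbcUtil:
     * only the "odps" engine is accepted; "dev" appends "_dev", any other env keeps the name.
     */
    static String resolveProjectName(String datasourceType, String project, String projectEnv) {
        if (!"odps".equals(datasourceType)) {
            // The original throws BizException(ResultCode.DATA_WORKS_ENGINE_SUPPORT_ERR) here
            throw new IllegalArgumentException("Unsupported engine: " + datasourceType);
        }
        if ("dev".equals(projectEnv)) {
            return String.join("_", project, projectEnv);
        }
        return project;
    }

    public static void main(String[] args) {
        System.out.println(resolveProjectName("odps", "sales", "dev"));  // sales_dev
        System.out.println(resolveProjectName("odps", "sales", "prod")); // sales
    }
}
```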

```java
import com.aliyuncs.DefaultAcsClient;
import com.aliyuncs.IAcsClient;
import com.aliyuncs.profile.DefaultProfile;

public class DataWorksOpenApiUtil {

    private static final String MAX_COMPUTE_JDBC_URL_FORMAT = "http://service.%s.maxcompute.aliyun.com/api";

    /** Default ODPS endpoint; the same value appears in the Odps class */
    private static final String DEFAULT_ENDPOINT = "http://service.odps.aliyun.com/api";

    /** DataWorks connection parameters */
    private final DataWorksOpenApiConnParam connParam;

    /** If the engine is MaxCompute, the DataWorks credentials can also be used to connect to it */
    private final MaxComputeJdbcUtil maxComputeJdbcUtil;

    private final MaxComputeSdkUtil maxComputeSdkUtil;

    /** true: connect through the MaxCompute SDK; false: through JDBC */
    private final boolean odpsSdk;

    /** OpenAPI client */
    private final IAcsClient client;

    public DataWorksOpenApiUtil(DataWorksOpenApiConnParam connParam, boolean odpsSdk) {
        this.connParam = connParam;
        this.client = buildClient();
        this.odpsSdk = odpsSdk;
        if (odpsSdk) {
            this.maxComputeJdbcUtil = null;
            this.maxComputeSdkUtil = buildMaxComputeSdkUtil();
        } else {
            this.maxComputeJdbcUtil = buildMaxComputeJdbcUtil();
            this.maxComputeSdkUtil = null;
        }
    }

    // buildClient() is not shown in the original; a minimal version using aliyun-java-sdk-core
    private IAcsClient buildClient() {
        DefaultProfile profile = DefaultProfile.getProfile(
                connParam.getRegion(), connParam.getAliyunAccessId(), connParam.getAliyunAccessKey());
        return new DefaultAcsClient(profile);
    }

    private MaxComputeSdkUtil buildMaxComputeSdkUtil() {
        final MaxComputeSdkConnParam param = new MaxComputeSdkConnParam();
        // Set the AccessKey ID and secret
        param.setAliyunAccessId(connParam.getAliyunAccessId());
        param.setAliyunAccessKey(connParam.getAliyunAccessKey());
        // Set the endpoint
        param.setMaxComputeEndpoint(DEFAULT_ENDPOINT);
        // Only the odps engine is handled for now
        final String datasourceType = connParam.getDatasourceType();
        if (!"odps".equals(datasourceType)) {
            throw new BizException(ResultCode.DATA_WORKS_ENGINE_SUPPORT_ERR);
        }
        // Connect to a different MaxCompute project depending on the project environment
        final String projectEnv = connParam.getProjectEnv();
        if ("dev".equals(projectEnv)) {
            // In dev, the MaxCompute project name is the DataWorks project name + "_dev"
            param.setProjectName(String.join("_", connParam.getProject(), projectEnv));
        } else {
            // In prod, the DataWorks and MaxCompute project names are the same
            param.setProjectName(connParam.getProject());
        }
        return new MaxComputeSdkUtil(param);
    }

    private MaxComputeJdbcUtil buildMaxComputeJdbcUtil() {
        final MaxComputeJdbcConnParam param = new MaxComputeJdbcConnParam();
        // Set the AccessKey ID and secret
        param.setAliyunAccessId(connParam.getAliyunAccessId());
        param.setAliyunAccessKey(connParam.getAliyunAccessKey());
        // Set the region-specific endpoint
        param.setEndpoint(String.format(MAX_COMPUTE_JDBC_URL_FORMAT, connParam.getRegion()));
        // Only the odps engine is handled for now
        final String datasourceType = connParam.getDatasourceType();
        if (!"odps".equals(datasourceType)) {
            throw new BizException(ResultCode.DATA_WORKS_ENGINE_SUPPORT_ERR);
        }
        // Connect to a different MaxCompute project depending on the project environment
        final String projectEnv = connParam.getProjectEnv();
        if ("dev".equals(projectEnv)) {
            // In dev, the MaxCompute project name is the DataWorks project name + "_dev"
            param.setProjectName(String.join("_", connParam.getProject(), projectEnv));
        } else {
            // In prod, the DataWorks and MaxCompute project names are the same
            param.setProjectName(connParam.getProject());
        }
        return new MaxComputeJdbcUtil(param);
    }

    // listAllFiles, listAllDdl and main, shown below, are also members of this class
}
```

Call OpenAPI to pull all scripts
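Stripped of the SDK types, `listAllFiles` follows a fetch-one-page, hand-it-off loop: the first response supplies the total count, which fixes how many further requests are needed. The sketch below models a page request with a hypothetical `PageFetcher` and uses `java.util.function.Consumer` in place of `CallBack.FileCallBack`, so the control flow can run without DataWorks.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

/** Callback-driven pagination modeled on listAllFiles (sketch with hypothetical types). */
final class PagedPull {
    /** One page of results plus the total record count, like ListFilesResponse.Data. */
    static final class Page<T> {
        final long totalCount;
        final List<T> items;

        Page(long totalCount, List<T> items) {
            this.totalCount = totalCount;
            this.items = items;
        }
    }

    /** Stands in for client.getAcsResponse(request) with pageNumber/pageSize set. */
    interface PageFetcher<T> {
        Page<T> fetch(int pageNumber, int pageSize);
    }

    private PagedPull() {}

    /** Fetches page 1, derives the page count from totalCount, then passes each non-empty page to the callback. */
    static <T> void pullAll(PageFetcher<T> fetcher, int pageSize, Consumer<List<T>> callBack) {
        Page<T> first = fetcher.fetch(1, pageSize);
        long pages = (first.totalCount + pageSize - 1) / pageSize;
        if (!first.items.isEmpty()) {
            callBack.accept(first.items);
        }
        for (int i = 2; i <= pages; i++) {
            List<T> items = fetcher.fetch(i, pageSize).items;
            if (!items.isEmpty()) {
                callBack.accept(items); // only the current page is referenced here
            }
        }
    }

    /** In-memory fake of the remote listing, for demonstration only. */
    static PageFetcher<String> fake(List<String> all) {
        return (pageNumber, pageSize) -> {
            int from = Math.min((pageNumber - 1) * pageSize, all.size());
            int to = Math.min(from + pageSize, all.size());
            return new Page<>(all.size(), all.subList(from, to));
        };
    }

    public static void main(String[] args) {
        List<String> files = new ArrayList<>();
        for (int i = 1; i <= 5; i++) {
            files.add(String.format("test_%03d", i));
        }
        // Page size 2: [test_001, test_002], [test_003, test_004], [test_005]
        pullAll(fake(files), 2, page -> System.out.println(page));
    }
}
```

The original fetches pages sequentially and notes that this could be parallelized; the sequential form keeps ordering and memory use simple.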

```java
/**
 * Pages through the files (scripts) under a folder path.
 *
 * @param pageSize   number of records per page (at most 100)
 * @param folderPath folder containing the files
 * @param userType   functional module the files belong to; optional
 * @param fileTypes  file code types, comma-separated; optional
 * @param callBack   invoked once per page of files
 */
public void listAllFiles(Integer pageSize, String folderPath, String userType, String fileTypes,
                         CallBack.FileCallBack callBack) throws ClientException {
    pageSize = setPageSize(pageSize);
    // Build the request
    final ListFilesRequest request = new ListFilesRequest();
    // Paging parameters
    request.setPageNumber(1);
    request.setPageSize(pageSize);
    // Parent folder
    request.setFileFolderPath(folderPath);
    // Region and project name
    request.setSysRegionId(connParam.getRegion());
    request.setProjectIdentifier(connParam.getProject());
    // Functional module the files belong to
    if (!ObjectUtils.isEmpty(userType)) {
        request.setUseType(userType);
    }
    // File code types
    if (!ObjectUtils.isEmpty(fileTypes)) {
        request.setFileTypes(fileTypes);
    }
    // Request page 1
    ListFilesResponse res = client.getAcsResponse(request);
    // Total number of records
    final Integer totalCount = res.getData().getTotalCount();
    final List<ListFilesResponse.Data.File> resultList = res.getData().getFiles();
    // Number of pages (ceiling division)
    long pages = totalCount % pageSize == 0 ? (totalCount / pageSize) : (totalCount / pageSize) + 1;
    // Hand page 1 to the callback
    callBack.handle(resultList);
    // Remaining pages, fetched sequentially from page 2 (could be parallelized);
    // the loop body does not run when there is only one page
    for (int i = 2; i <= pages; i++) {
        // Page number
        request.setPageNumber(i);
        // Page size
        request.setPageSize(pageSize);
        // Send the request
        res = client.getAcsResponse(request);
        final List<ListFilesResponse.Data.File> files = res.getData().getFiles();
        if (!ObjectUtils.isEmpty(files)) {
            // Hand this page to the callback
            callBack.handle(files);
        }
    }
}
```

Connecting to MaxCompute to pull all DDL scripts

DataWorks tool class code, which hands each table's DDL to a callback.
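`listAllDdl` only implements the SDK path: when the tool class is built with `odpsSdk = false`, it does nothing. Since the JDBC route only needs `SHOW CREATE TABLE`, a sketch using plain `java.sql` follows. The class and method names are hypothetical, the table-name check is an added safeguard because the name is concatenated into SQL, and the assumption that the statement text comes back in column 1 (possibly over several rows) should be verified against the odps-jdbc driver.

```java
import java.sql.Connection;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;
import java.util.regex.Pattern;

/** JDBC-side DDL lookup via SHOW CREATE TABLE (sketch; names are hypothetical). */
final class JdbcDdl {
    /** Conservative identifier check: letters, digits and underscores only. */
    private static final Pattern TABLE_NAME = Pattern.compile("[A-Za-z_][A-Za-z0-9_]*");

    private JdbcDdl() {}

    /** Builds the statement text, rejecting names that could inject SQL. */
    static String showCreateTableSql(String tableName) {
        if (tableName == null || !TABLE_NAME.matcher(tableName).matches()) {
            throw new IllegalArgumentException("Invalid table name: " + tableName);
        }
        return "SHOW CREATE TABLE " + tableName;
    }

    /** Runs SHOW CREATE TABLE and joins column 1 of every returned row (assumed result shape). */
    static String showCreateTable(Connection conn, String tableName) throws SQLException {
        StringBuilder ddl = new StringBuilder();
        try (Statement stmt = conn.createStatement();
             ResultSet rs = stmt.executeQuery(showCreateTableSql(tableName))) {
            while (rs.next()) {
                if (ddl.length() > 0) {
                    ddl.append('\n');
                }
                ddl.append(rs.getString(1));
            }
        }
        return ddl.toString();
    }
}
```

An `else` branch in `listAllDdl` could then loop over the table names and call `callBack.handle(tableName, JdbcDdl.showCreateTable(conn, tableName))`.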

```java
/**
 * Fetches the DDL of every table.
 * @param callBack callback invoked with each table name and its DDL
 */
public void listAllDdl(CallBack.DdlCallBack callBack) {
    if (odpsSdk) {
        final List<TableMetaInfo> tableInfos = maxComputeSdkUtil.getTableInfos();
        for (TableMetaInfo tableInfo : tableInfos) {
            final String tableName = tableInfo.getTableName();
            final String sqlCreateDesc = maxComputeSdkUtil.getSqlCreateDesc(tableName);
            callBack.handle(tableName, sqlCreateDesc);
        }
    }
    // No JDBC branch is implemented: with odpsSdk == false this method does nothing
}
```

MaxCompute tool class code: build the CREATE TABLE statement from the table name. The SDK version is shown here; over JDBC, executing show create table returns the statement directly.
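The string assembly in `getSqlCreateDesc` only reads a name, a type name and an optional comment for each column. The sketch below replays that assembly over a hypothetical `Col` value type standing in for `com.aliyun.odps.Column`, uses `String.join` for the separators, and applies the same empty-comment check to partition columns as to regular ones.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

/** Rebuilds a MaxCompute CREATE TABLE statement from column metadata (sketch). */
final class DdlBuilder {
    /** Hypothetical stand-in for com.aliyun.odps.Column: name, type name, optional comment. */
    static final class Col {
        final String name;
        final String type;
        final String comment;

        Col(String name, String type, String comment) {
            this.name = name;
            this.type = type;
            this.comment = comment;
        }

        /** "name TYPE", plus " COMMENT '...'" only when a comment is present. */
        String render() {
            String base = name + " " + type;
            return (comment == null || comment.isEmpty()) ? base : base + " COMMENT '" + comment + "'";
        }
    }

    private DdlBuilder() {}

    static String build(String table, List<Col> columns, List<Col> partitions) {
        StringBuilder ddl = new StringBuilder("CREATE TABLE IF NOT EXISTS ").append(table).append(" (\n");
        List<String> cols = new ArrayList<>();
        for (Col c : columns) {
            cols.add("  " + c.render());
        }
        ddl.append(String.join(",\n", cols)).append("\n)");
        if (!partitions.isEmpty()) {
            List<String> parts = new ArrayList<>();
            for (Col p : partitions) {
                parts.add(p.render());
            }
            ddl.append("\nPARTITIONED BY (").append(String.join(", ", parts)).append(")");
        }
        return ddl.append(";").toString();
    }

    public static void main(String[] args) {
        List<Col> cols = Arrays.asList(new Col("id", "BIGINT", "primary id"), new Col("name", "STRING", null));
        List<Col> parts = Collections.singletonList(new Col("p_date", "STRING", "data date"));
        System.out.println(build("demo_table", cols, parts));
    }
}
```

`String.join` removes the index bookkeeping the original uses to decide whether a trailing comma is needed.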

```java
// Requires: com.aliyun.odps.Column, com.aliyun.odps.Table, com.aliyun.odps.TableSchema,
// org.springframework.util.ObjectUtils, java.util.List
// odps is the Odps client field of MaxComputeSdkUtil (not shown)

/**
 * Builds the CREATE TABLE statement for a table.
 * @param tableName table name
 * @return the CREATE TABLE statement
 */
public String getSqlCreateDesc(String tableName) {
    final Table table = odps.tables().get(tableName);
    // CREATE TABLE statement being assembled
    StringBuilder ddl = new StringBuilder();
    // Table schema
    TableSchema tableSchema = table.getSchema();
    // Table comment (only referenced by the commented-out lines at the end)
    String tableComment = table.getComment();
    // Columns with their comments
    List<Column> columns = tableSchema.getColumns();
    /* Assemble the MaxCompute DDL */
    // Table name
    ddl.append("CREATE TABLE IF NOT EXISTS ");
    ddl.append(tableName).append("\n");
    ddl.append(" (\n");
    // Columns
    int index = 1;
    for (Column column : columns) {
        ddl.append(" ").append(column.getName()).append("\t\t").append(column.getTypeInfo().getTypeName());
        if (!ObjectUtils.isEmpty(column.getComment())) {
            ddl.append(" COMMENT '").append(column.getComment()).append("'");
        }
        if (index == columns.size()) {
            ddl.append("\n");
        } else {
            ddl.append(",\n");
        }
        index++;
    }
    ddl.append(" )\n");
    // Partition columns
    List<Column> partitionColumns = tableSchema.getPartitionColumns();
    if (!ObjectUtils.isEmpty(partitionColumns)) {
        ddl.append("PARTITIONED BY (");
        int partitionIndex = 1;
        for (Column partitionColumn : partitionColumns) {
            ddl.append(partitionColumn.getName()).append(" ").append(partitionColumn.getTypeInfo().getTypeName());
            // Skip COMMENT when empty, as for regular columns (avoids COMMENT 'null')
            if (!ObjectUtils.isEmpty(partitionColumn.getComment())) {
                ddl.append(" COMMENT '").append(partitionColumn.getComment()).append("'");
            }
            ddl.append(partitionIndex == partitionColumns.size() ? "\n" : ",\n");
            partitionIndex++;
        }
        ddl.append(")\n");
    }
    // ddl.append("STORED AS ALIORC \n");
    // ddl.append("TBLPROPERTIES ('comment'='").append(tableComment).append("');");
    ddl.append(";");
    return ddl.toString();
}
```

Test code

```java
public static void main(String[] args) throws ClientException {
    final DataWorksOpenApiConnParam connParam = new DataWorksOpenApiConnParam();
    connParam.setAliyunAccessId("your-aliyun-access-key-id");
    connParam.setAliyunAccessKey("your-aliyun-access-key-secret");
    // Region of the DataWorks workspace
    connParam.setRegion("cn-chengdu");
    // DataWorks project
    connParam.setProject("your-dataworks-project");
    // Project environment; if the project has no separate environments, use prod
    connParam.setProjectEnv("dev");
    // Data engine type: odps
    connParam.setDatasourceType("odps");
    // DataWorks API endpoint
    connParam.setEndPoint("dataworks.cn-chengdu.aliyuncs.com");
    final DataWorksOpenApiUtil dataWorksOpenApiUtil = new DataWorksOpenApiUtil(connParam, true);
    // Pull all ODPS scripts (file type "10" selects ODPS SQL files)
    dataWorksOpenApiUtil.listAllFiles(100, "", "", "10", files -> {
        // Handle each page of files
        for (ListFilesResponse.Data.File file : files) {
            final String fileName = file.getFileName();
            System.out.println(fileName);
        }
    });
    // Pull the CREATE TABLE statement of every table
    dataWorksOpenApiUtil.listAllDdl((tableName, tableDdlContent) -> {
        System.out.println("=======================================");
        System.out.println("Table name: " + tableName + ", content as follows:\n");
        System.out.println(tableDdlContent);
        System.out.println("=======================================");
    });
}
```

Test results

```text
test_001 script
test_002 script
test_003 script
test_004 script
test_005 script
=======================================
Table name: test_abc_info, content as follows:

CREATE TABLE IF NOT EXISTS test_abc_info
(
test_abc1 STRING COMMENT 'Field 1',
test_abc2 STRING COMMENT 'Field 2',
test_abc3 STRING COMMENT 'Field 3',
test_abc4 STRING COMMENT 'Field 4',
test_abc5 STRING COMMENT 'Field 5',
test_abc6 STRING COMMENT 'Field 6',
test_abc7 STRING COMMENT 'Field 7',
test_abc8 STRING COMMENT 'Field 8'
)
PARTITIONED BY (p_date STRING COMMENT 'data date'
)
;
=======================================
```


The above is the detailed content of How SpringBoot integrates dataworks. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1670

1670

14

14

1428

1428

52

52

1329

1329

25

25

1274

1274

29

29

1256

1256

24

24

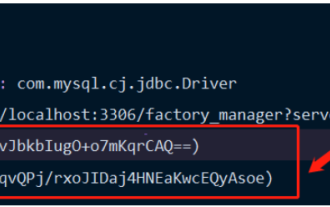

How Springboot integrates Jasypt to implement configuration file encryption

Jun 01, 2023 am 08:55 AM

How Springboot integrates Jasypt to implement configuration file encryption

Jun 01, 2023 am 08:55 AM

Introduction to Jasypt Jasypt is a java library that allows a developer to add basic encryption functionality to his/her project with minimal effort and does not require a deep understanding of how encryption works. High security for one-way and two-way encryption. , standards-based encryption technology. Encrypt passwords, text, numbers, binaries... Suitable for integration into Spring-based applications, open API, for use with any JCE provider... Add the following dependency: com.github.ulisesbocchiojasypt-spring-boot-starter2. 1.1Jasypt benefits protect our system security. Even if the code is leaked, the data source can be guaranteed.

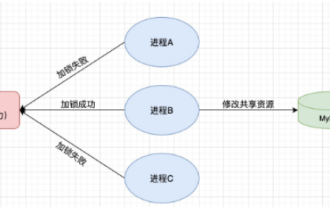

How to use Redis to implement distributed locks in SpringBoot

Jun 03, 2023 am 08:16 AM

How to use Redis to implement distributed locks in SpringBoot

Jun 03, 2023 am 08:16 AM

1. Redis implements distributed lock principle and why distributed locks are needed. Before talking about distributed locks, it is necessary to explain why distributed locks are needed. The opposite of distributed locks is stand-alone locks. When we write multi-threaded programs, we avoid data problems caused by operating a shared variable at the same time. We usually use a lock to mutually exclude the shared variables to ensure the correctness of the shared variables. Its scope of use is in the same process. If there are multiple processes that need to operate a shared resource at the same time, how can they be mutually exclusive? Today's business applications are usually microservice architecture, which also means that one application will deploy multiple processes. If multiple processes need to modify the same row of records in MySQL, in order to avoid dirty data caused by out-of-order operations, distribution needs to be introduced at this time. The style is locked. Want to achieve points

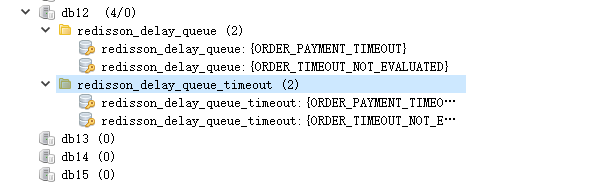

How SpringBoot integrates Redisson to implement delay queue

May 30, 2023 pm 02:40 PM

How SpringBoot integrates Redisson to implement delay queue

May 30, 2023 pm 02:40 PM

Usage scenario 1. The order was placed successfully but the payment was not made within 30 minutes. The payment timed out and the order was automatically canceled. 2. The order was signed and no evaluation was conducted for 7 days after signing. If the order times out and is not evaluated, the system defaults to a positive rating. 3. The order is placed successfully. If the merchant does not receive the order for 5 minutes, the order is cancelled. 4. The delivery times out, and push SMS reminder... For scenarios with long delays and low real-time performance, we can Use task scheduling to perform regular polling processing. For example: xxl-job Today we will pick

How to solve the problem that springboot cannot access the file after reading it into a jar package

Jun 03, 2023 pm 04:38 PM

How to solve the problem that springboot cannot access the file after reading it into a jar package

Jun 03, 2023 pm 04:38 PM

Springboot reads the file, but cannot access the latest development after packaging it into a jar package. There is a situation where springboot cannot read the file after packaging it into a jar package. The reason is that after packaging, the virtual path of the file is invalid and can only be accessed through the stream. Read. The file is under resources publicvoidtest(){Listnames=newArrayList();InputStreamReaderread=null;try{ClassPathResourceresource=newClassPathResource("name.txt");Input

How to implement Springboot+Mybatis-plus without using SQL statements to add multiple tables

Jun 02, 2023 am 11:07 AM

How to implement Springboot+Mybatis-plus without using SQL statements to add multiple tables

Jun 02, 2023 am 11:07 AM

When Springboot+Mybatis-plus does not use SQL statements to perform multi-table adding operations, the problems I encountered are decomposed by simulating thinking in the test environment: Create a BrandDTO object with parameters to simulate passing parameters to the background. We all know that it is extremely difficult to perform multi-table operations in Mybatis-plus. If you do not use tools such as Mybatis-plus-join, you can only configure the corresponding Mapper.xml file and configure The smelly and long ResultMap, and then write the corresponding sql statement. Although this method seems cumbersome, it is highly flexible and allows us to

Comparison and difference analysis between SpringBoot and SpringMVC

Dec 29, 2023 am 11:02 AM

Comparison and difference analysis between SpringBoot and SpringMVC

Dec 29, 2023 am 11:02 AM

SpringBoot and SpringMVC are both commonly used frameworks in Java development, but there are some obvious differences between them. This article will explore the features and uses of these two frameworks and compare their differences. First, let's learn about SpringBoot. SpringBoot was developed by the Pivotal team to simplify the creation and deployment of applications based on the Spring framework. It provides a fast, lightweight way to build stand-alone, executable

How SpringBoot customizes Redis to implement cache serialization

Jun 03, 2023 am 11:32 AM

How SpringBoot customizes Redis to implement cache serialization

Jun 03, 2023 am 11:32 AM

1. Customize RedisTemplate1.1, RedisAPI default serialization mechanism. The API-based Redis cache implementation uses the RedisTemplate template for data caching operations. Here, open the RedisTemplate class and view the source code information of the class. publicclassRedisTemplateextendsRedisAccessorimplementsRedisOperations, BeanClassLoaderAware{//Declare key, Various serialization methods of value, the initial value is empty @NullableprivateRedisSe

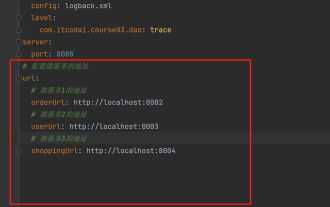

How to get the value in application.yml in springboot

Jun 03, 2023 pm 06:43 PM

How to get the value in application.yml in springboot

Jun 03, 2023 pm 06:43 PM

In projects, some configuration information is often needed. This information may have different configurations in the test environment and the production environment, and may need to be modified later based on actual business conditions. We cannot hard-code these configurations in the code. It is best to write them in the configuration file. For example, you can write this information in the application.yml file. So, how to get or use this address in the code? There are 2 methods. Method 1: We can get the value corresponding to the key in the configuration file (application.yml) through the ${key} annotated with @Value. This method is suitable for situations where there are relatively few microservices. Method 2: In actual projects, When business is complicated, logic